Abstract

The purpose of the study was to determine how music teachers respond to DREAM, a virtual space for exchanging information about digital learning tools. The research determined how teachers experienced DREAM during beta testing in terms of (a) navigation, (b) search and browse functions, and (c) quality of resources. Data were collected from over 80 music teachers using focus groups, questionnaires, log sheets, and site analytics to determine if the tool met their teaching needs. Participants in the final phase of testing completed a questionnaire about professional context and skill level using computers and mobile devices and also filled out log sheets at the end of each of four testing sessions. User experiences were analyzed in terms of usefulness, efficiency, and satisfaction. With each new phase of beta testing, navigation and search mechanisms were improved and more resources were added by the participants. By the end of the study, DREAM provided an intuitive and efficient tool for a range of music teaching needs. The results of the usability study give strong support for the importance of beta testing, which ultimately led to the creation of an effective and attractive tool for studio music teachers to keep abreast of digital technologies. In a profession where many teachers experience isolation, DREAM has the potential to serve as a vital site to enable teachers to find rich digital resources to enhance their music teaching practices.

Keywords: Music education, digital tools, usability

Introduction

Digital applications for music education are growing at an astounding rate and are changing the ways people teach, learn, and make music (Beckstead, 2001; Burnard, 2007; Partti, 2012; Rainie & Wellman, 2012; Waldron, 2013; Wise, Greenwood, & Davis, 2011). Accessing reliable information about these new tools is important for music teachers so that they can assess the appropriateness of such tools for their students’ needs. Unfortunately, teachers are perennially time-starved and are unable to systematically examine and evaluate the digital resources that are available. Classroom teachers often rely on interactions with their colleagues to learn about new technologies. However, independent music teachers work in isolation (Feldman, 2010), making such informal discussions about resources less likely and certainly less comprehensive. Thus, a tool that provides a centralized place where independent music teachers can keep abreast of high-quality digital technologies for their field has the potential to assist music teachers in substantial ways.

The Digital Resource Exchange About Music (DREAM) is a digital tool designed to provide teachers with digital resources related to music education and to studio instruction. DREAM is part of a suite of digital tools developed by the multi-institutional Canadian partnership between Queen’s University, Concordia University, and The Royal Conservatory (www.musictoolsuite.ca).

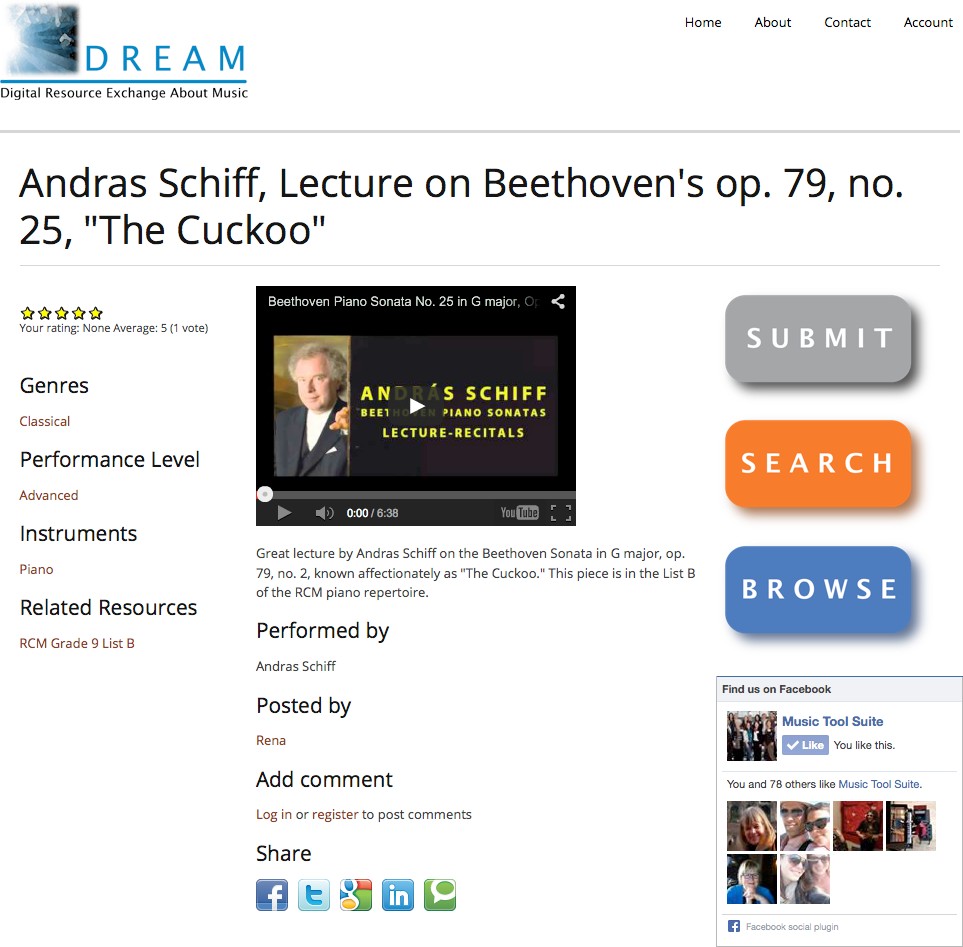

DREAM enables music teachers to search for resources, evaluate them, read about other teachers’ views of them, and add resources of their own to the DREAM repository. In the release version (v. 1.4), DREAM resources were organized into six broad categories: (a) musical repertoire, (b) practising, (c) ear/sight, (d) creating/composing, (e) theory/history, and (f) professional resources. All of the resources are searchable by title and keywords, and users can also filter the resources by instrument, ability level, or platform (e.g., used on computer, tablet, or smartphone). DREAM also recommends resources to users based on their prior choices.

In this age of ubiquitous and easily accessible digital tools, it is essential that the DREAM tool operates in a way that is seamless and efficient for intended users. Thus, before releasing DREAM to the public, a multi-phase usability testing protocol for DREAM was designed. This research study describes how DREAM evolved with the input of 12 core test participants and designers, a group of 24 classroom music teachers enrolled in a teacher education program, as well as 47 studio music teachers representing eight of the thirteen provinces and territories in Canada. Most of the final group of beta testers were from Ontario (51%). All regions of Canada were represented with the exception of the northern territories.

Related Literature

Usability testing refers to the examination of how intended users interact with a new tool. Usability testing is the most common way for software and hardware developers to see how users actually interact with their tool before it is released for public use. The process of usability testing involves learning from test participants who represent the target audience (in our case, Canadian independent music teachers) by determining the degree to which the product meets its goals (Rubin & Chisnell, 2008; Yadrich, Fitzgerald, Werkowitch, & Smith, 2012).

Methods of usability testing include ethnographic research, participatory design, focus group research, surveys, walk-throughs, closed and open card sorting, paper prototyping, and expert evaluations, among others (Rubin & Chisnell, 2008). These methods can be either formative or summative in nature. All of these forms of testing allow the designers and developers to see if the product’s design matches the users’ expectations and supports their goals (Barnum, 2011). The present study employed participatory design, focus group research, and surveys, and was formative in nature. The design work and technical modifications continued throughout the beta testing phases.

Beta testing begins when a tool is complete in terms of the intended features, but is likely to still have bugs, may have speed/performance issues, and is still open to potential design changes. The beta release usually marks the first time that the software is made available outside the organization that developed it; users of the beta version are the beta testers (Barnum, 2011). The beta version can also be used for demonstrations and previews, as the software is generally stable enough for such demonstrations to take place (Rubin & Chisnell, 2008). Indeed, these types of demonstrations and previews were provided to stakeholders throughout the beta testing process during the time that the research reported here took place.

While many developers have expertise that informs product design, it is also important to understand the experience of the user who has no background information about the product. As Barnum (2011) states, “From the moment you know enough to talk about a product—any product, whether it’s hardware, software, a video game, a training guide, or a website—you know too much to be able to tell if the product would be usable for a person who doesn’t know what you know” (p. 9). For this reason, in the final phase of beta testing, the DREAM URL was shared with 47 teachers who had no prior association with the tool, allowing the researchers to determine how DREAM would be received by teachers who were new to it, and to compare their reactions with those of the 12 core teacher participants and designers.

Rubin and Chisnell (2008) further assert that a usable product must be “useful, efficient, effective, satisfying, learnable, and accessible” (p. 4). In a similar vein, Barnum (2011) states that tools should be easy to learn, easy to use, intuitive, and fun. Barnum notes that the International Organization for Standardization (9241-11) defines usability as “the extent to which a product can be used by specified users to achieve specified goals with effectiveness, efficiency, and satisfaction in a specified context of use” (p. 11). Rubin and Chisnell extend this definition of usability by noting that one of the most important aspects of making something usable is the “absence of frustration in using it … [so that] the user can do what he or she wants to do the way he or she expects to be able to do it, without hindrance, hesitation, or questions” (p. 4). The parallel idea in Barnum’s (2011) work is the notion that usability should be invisible; that is, the built-in usability of products suits the user so that the user doesn’t have to “bend to the will of the product” (p. 1). Drawing on these three sources, the central purpose of this study was to determine the extent to which beta testers found DREAM to be efficient, useful, and satisfying. Broadly conceived, this study is about the usability of DREAM.

Method

The research and software development were conducted using a user-centered design process. Early in the design process, a group of core test participants commented on the emerging design and remained in direct contact with the design team throughout the development cycle. The study involved an iterative research and design approach, in which test participants evaluated the tool in terms of its usefulness, efficiency, and satisfaction in each of the four beta testing phases reported here.

Purposeful sampling techniques were used to ensure that a wide array of music teachers was represented in the first three phases of beta testing, in order to learn how different types of users interacted with DREAM. Participants in the first three phases of this usability study included independent Canadian music teachers; in addition, in the first phase, 24 classroom music teachers enrolled in a teacher education program also took part. In the fourth and final phase, a random sample of 1,000 email contacts was drawn from The Royal Conservatory database of teachers. These teachers were sent an email invitation to participate in the study; 47 took part.

Data were collected using focus groups, questionnaires, log sheets, and site analytics. Phase 1 was conducted using log sheets. Phase 2 involved focus groups. Phase 3 participants were given a short questionnaire to complete, which served as a pilot for the questionnaires used in Phase 4. In Phase 4, participants were asked to complete a short questionnaire at the outset to elicit information about their professional context (what they taught, how long they had been teaching) and their perceived skill level using computers, tablets, and smartphones (see Appendix). These participants were asked to log in to DREAM at least four times over a three-week period. During that time, they were asked to browse the website, add resources, and/or add reviews of resources. At the end of each session, these participants filled out a short electronic log sheet, accessible directly through DREAM, which asked specific questions about their experiences related to efficiency, usability, and satisfaction. Site analytics were used to determine how frequently teachers accessed DREAM, which functions they used to access resources, and how often they made comments or uploaded resources. At the completion of the usability test period, participants completed an exit survey to gauge their overall perceptions of DREAM (see Appendix).

Responses to open-ended questions from the focus groups, as well as the log sheet data, were analysed, guided by the usability literature described previously. Descriptive statistics were compiled from the closed-ended questions on all types of questionnaires. The analysis focused on determining how easy it was to learn to navigate the site, whether the search and browse functions were effective, and whether users were able to add, comment on, and rate resources. These features, in turn, allowed us to ascertain the specific aspects of DREAM that contributed to or detracted from its being usable, efficient, and satisfying to use. Results from each iterative phase of analysis were communicated to the designers and developers in a dynamic fashion, often involving daily communication between the researchers and the design team.

Results

There were four phases of usability testing as indicated in Table 1. Each of the four phases is described in turn, summarizing the user responses, changes made as a result of user feedback, and which aspects of participant experience (usefulness, efficiency, and satisfaction) were most salient for each phase of development.

The first phase of testing involved 24 classroom-based music teachers who were in their final term of the teacher education program at Queen’s University, Kingston, Canada. They tested DREAM on a variety of devices, including smartphones, tablets, and laptops representing iOS, Windows, and Android platforms. They filled out log sheets at the end of the testing period. From the outset, the overall reaction to the idea of DREAM was positive. Users were uniformly delighted at the richness of the resources in the collection. Even in the first version (v. 1.0), it was clear that DREAM had the potential to be a valuable tool. However, beta testers cautioned that it was important for the resources to be of high quality, especially the recordings of repertoire that students might access when preparing for examinations. Issues of quality were regarded as having an impact on the overall usefulness of the tool. Institutional stakeholders to whom the tool was demonstrated during the beta testing period made similar comments.

Despite the overall positive response, participants experienced a number of difficulties in navigating the tool, which made it more difficult to use. Test participants found it confusing when formats between pages were not consistent, which reduced the efficiency of the tool, as too much time was spent learning to read each new page. Some participants stated that they found the interface “too busy” and “not contemporary,” again making the tool less satisfying than it might be, especially when considering Rubin and Chisnell’s (2008) criterion of absence of frustration and Barnum’s (2011) claim that tools should be intuitive and fun.

In addition to navigation and design issues, technical difficulties were also encountered in Phase 1 beta testing. For example, error messages appeared that made no sense to the users. There were also frustrations with account creation, login and password retrieval. These technical concerns were all addressed within a week of the first phase of testing. Navigation and design issues were not addressed until the results from Phase 2 were assessed.

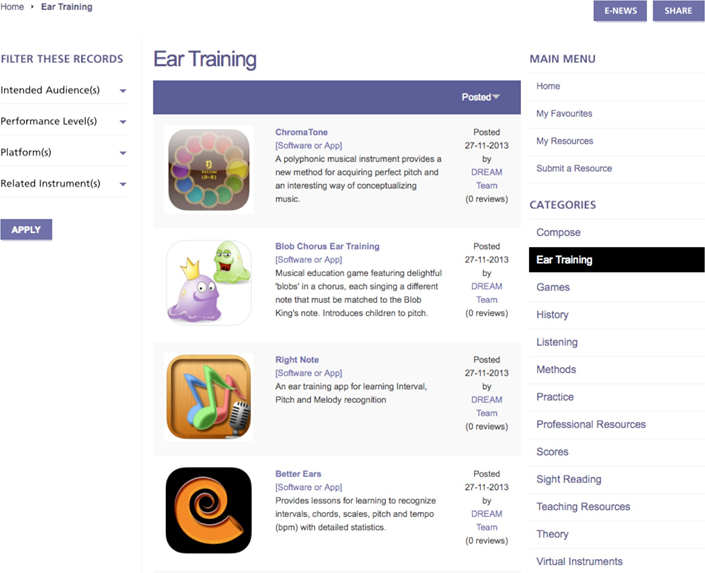

The second phase of testing involved the core group of independent music teachers and DREAM designers. There were two focus groups: the first with teachers, and the second with both teachers and designers, with overlap from the first group. These testers responded similarly to the 24 music teachers in the first phase regarding navigation and overall design. Considerable discussion took place during both focus groups about the search function, which users found to be largely ineffective. In addition, participants were very concerned that the design was not fully responsive for mobile platforms. Finally, concerns were expressed regarding the number of categories. As a result, the original 13 categories were compressed into 6 categories, with two layers of sub-categories as shown in Table 2.

The most pressing concern expressed by the second focus group, however, was that the design was not satisfying. First, the look was less contemporary and more “busy” than users desired (see Figure 1). Second, as noted above, the design was not responsive on smartphones and tablets. Consequently, a major re-design process took place after the feedback from Phase 2 was collected. This re-design was not originally anticipated, and involved a complex migration process from a Drupal 6 to a Drupal 7 environment, as well as the use of a responsive theme as a basis for the re-design. The migration process took a full seven weeks. After the records were migrated, another five weeks were spent modifying the design.

While this re-design process meant that the launch date for DREAM was delayed, the project leaders and designers felt that the re-design was essential to the ultimate success of the tool, and that it was better to invest the time mid-way through beta testing rather than waiting for a subsequent release. The most crucial issues addressed during the re-design concerned satisfaction and efficiency. While there is no question that the initial users of DREAM, even prior to Phase 1, were already enthusiastic about the potential of the tool, past experience has taught us that, in the longer term, satisfaction in using a digital tool has as much impact as, or more impact than, the perceived value of the tool. That is, many users will stop using a tool that is difficult to navigate, even if the tool has perceived value (Buxton, 2007).

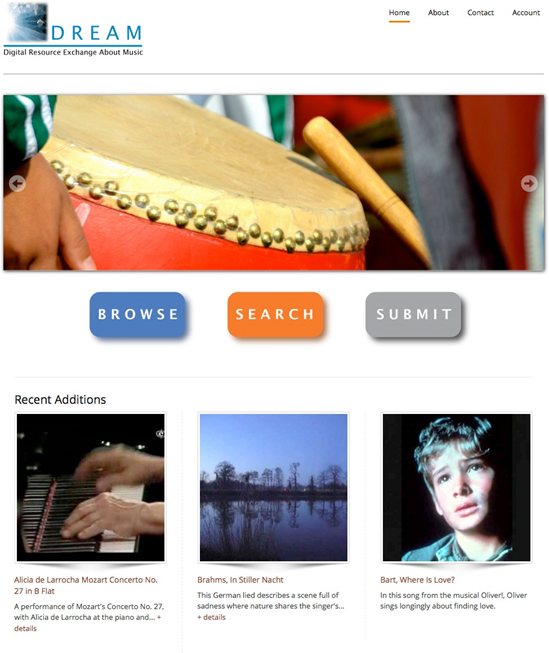

It was with great anticipation that the focus group began exploring the re-designed version of DREAM (v. 1.3). The overwhelming response was that users were impressed with the new “look and feel” (see Figure 2). On the survey distributed to Phase 3 participants, one teacher wrote, “LOVING the new look!!!! Very clean and easy to navigate.” Another claimed that the improvements were “awesome.”

In addition to the teachers’ positive response to the look of the user interface, Phase 3 participants were also enthusiastic about the navigation of the tool. Not surprisingly, since they found the design much more efficient and satisfying, they began to assess the usefulness of the tool in terms of the quality of resources and whether the tool yielded the resources they were seeking. There were also positive responses regarding the quality of the resources themselves. One teacher participant wrote, “I just used DREAM in a lesson! Found three great sight-reading/ear-training apps quickly for summer practicing. And I have three very excited kids.” During the Phase 3 testing, with a stable platform in place, the development team systematically added hundreds of additional resources, further increasing the usefulness of the tool.

Some of the concerns raised in Phase 3 included confusion about categories, resulting in another re-working of the categories for the release version (see Table 3). These minor changes improved the effectiveness of the search functions. The filters for refining searches were also modified as a result of Phase 3 feedback, to include instruments, performance level, genre, type of resource, and platform. However, difficulties with the search function persisted, and modifying the search became the primary focus of the final stages of design. Phase 3 participants also made some stylistic suggestions, including font sizes and button placement, all of which were implemented prior to the fourth and final stage of beta testing.

The final stage of beta testing was conducted with the random sample of Canadian teachers described previously. These teachers completed an initial survey that helped us understand their professional context and technological savvy. We asked similar questions in another national survey of independent music teachers (Upitis, Brook, & Abrami, in press). The professional profiles of the Phase 4 test participants were similar, but not identical, to those of the national survey respondents. Like the national group, the Phase 4 participants had been teaching for anywhere from 5 years or less to over 40 years. However, 2% of the beta testers had been teaching for over 40 years, compared with a considerably higher 12% of the national survey respondents. Conversely, 11% of the beta testers had been teaching for 5 years or less, compared with 7% of the national sample. These figures suggest that a somewhat younger population responded to the call to beta test DREAM than the music teaching population as a whole. In other ways, however, their profiles matched: these teachers worked primarily in piano and music theory, with voice a distant third (20%). Studio sizes in both groups also varied in similar ways, with approximately 25% of the teachers having studios with fewer than 10 students, the bulk having 11 to 30 students, and another 25 to 30% having more than 30 students. For both groups, most students ranged between 7 and 17 years of age.

The two groups differed in terms of comfort with technology, with the beta testers appearing to be somewhat more comfortable with digital tools. In the national survey, 40% of the teachers reported being very comfortable with technology; in the beta testing group, this figure was closer to 50%. In the national survey, 7% said that they were uncomfortable with technology; not surprisingly, no one among the beta testers fell into this category. In both groups, close to 90% of the teachers reported having Internet access in their studios.

As noted previously, unlike the core testers and designers, these beta testers had no prior association with DREAM. Thus, sending them nothing more than the URL was a true test of how DREAM might first be received in the field. And while the beta testers differed in some ways from the field as a whole, the similarities between the two populations were much more striking, indicating that the ways in which the beta testers received DREAM are likely indicative of how DREAM would be received by the general population of independent music teachers.

As with the Phase 3 core testers who had experienced DREAM in its earlier versions, the Phase 4 participants reacted positively to the concept and design. Questionnaire comments included, “It was all very exciting,” and “It looks like a good tool for teachers to find resources without having to sift through hours of websites and trying to use the correct keyword for searches,” and, “It looks interesting. I already found some websites I want to explore.” The simplicity of the design was noted by many of the beta testers; they commented on the “clean” layout, and intuitiveness of the pages. A sample record page appears below:

None of the participants reported any confusion with the categories (see Table 3). There were, however, issues related to the search function, where searches yielded irrelevant entries. One person reported a technical concern with logging in, and several respondents pointed out problems with some of the mobile interfaces. Some participants wished for more resources for the platforms that they used most often, which were not the most popular platforms for most teachers and students. The project leaders and designers deemed that these technical and site-population issues could all be addressed without another major re-design.

Despite the promise of delivering a highly satisfying tool, it is important to note that a number of the participants raised issues related to the quality of the resources, echoing the comments made by stakeholders and the Phase 1 beta testers. The quality of the resources will ultimately determine the overall usefulness of the tool. Some participants said that they would welcome more reviews of the apps before exploring them further; others wondered if the resources would continue to be vetted so that the quality remained high. Still others asked if reviews could be provided by known and credible teachers. All of these issues need to be addressed in the ongoing maintenance of the tool, once the design parameters are solidified before final release. That said, even in its beta version, the tool was already yielding useful results for beta testers. One teacher commented, “Thank you for doing this. This kind of resource is something that will be of great value to students and teachers. The sites with Alexander Technique help were a lovely surprise, and something I will definitely be going back to.” Nearly all of the beta testers indicated that they intended to use DREAM in their teaching practices, and reported that they intended to tell colleagues and students about the tool.

Initial difficulties that teacher participants experienced with the navigation of DREAM were addressed in subsequent beta versions of the tool, which included a major change in design at the end of Phase 2, resulting in a new and cleaner interface design that was responsive on computers, tablets, and smartphones. With each new round of beta testing, the search mechanisms were improved and more resources were added by the participants and curriculum developers. By the end of the study, DREAM provided an attractive and useful tool for a range of teachers and was deemed ready for wide public release.

Conclusions

The results of the usability study give strong support for the conclusion that DREAM can serve as a centralized place for studio music teachers to keep abreast of digital technologies. Rubin and Chisnell (2008) remind us that the reason for carrying out this type of usability study is to ensure that the products created are useful to and valued by the target audience (music teachers), easy to learn to use, help people be effective and efficient in terms of what they are attempting to achieve, and are “satisfying (and possibly even delightful) to use” (p. 21).

The results of the DREAM usability research give us every indication that DREAM will be valued by music teachers in terms of efficiency and effectiveness, and that it will be enjoyable and enticing to use, so long as there is a plan in place to continue to vet resources and provide reviews, thereby ensuring that only the highest quality resources are included. In a profession where many teachers experience isolation, it appears clear that DREAM has the potential to engage teachers in professional discussions as well as to provide rich resources for their music teaching studios.

Appendix: Log Sheets and Final Usability Questionnaire

A. Log Sheets (used multiple times by Phase 3 and Phase 4 test participants)

How long did you spend using DREAM?

Less than 10 minutes

10 to 19 minutes

20 to 29 minutes

30+ minutes

What type of hardware did you use?

Computer

Tablet (Android)

Tablet (iPad)

Tablet (Windows)

Tablet (Blackberry)

Smartphone (Android)

Smartphone (iPhone)

Smartphone (Windows)

Smartphone (Blackberry)

Other (please specify) _______________________

What browser did you use?

Internet Explorer

Mozilla Firefox

Google Chrome

Safari

Other (please specify) ______________

What was your goal for this session using DREAM?

How easy was it to do what you wanted to do?

Not easy 1 2 3 4 5 6 7 Very easy

What was the most frustrating aspect of using DREAM?

What aspect of DREAM would you change to make your experience more enjoyable or useful?

Is there anything else you want to tell us?

B. Final Questionnaire

Section 1: Type of Use

On what device(s) did you access DREAM? (Select all that apply.)

Computer

Tablet (Android)

Tablet (iPad)

Tablet (Windows)

Tablet (Blackberry)

Smartphone (Android)

Smartphone (iPhone)

Smartphone (Windows)

Smartphone (Blackberry)

Other (please specify) _______________________

How many times did you access DREAM in the past three weeks?

Fewer than 4 times

4 times

More than 4 times

On average, how long did you spend on the site each time you accessed it?

Less than 10 minutes

10 to 19 minutes

20 to 29 minutes

30+ minutes

Section 2: Navigation & Features

How easy was it to learn to use DREAM?

Not easy 1 2 3 4 5 6 7 Very easy

How easy was it to find specific information/resources?

Not easy 1 2 3 4 5 6 7 Very easy

What would have made it easier to learn to navigate DREAM?

What would have made it easier to find resources in DREAM?

How often were the recommended resources of interest to you?

Often

Sometimes

Rarely

Never

How many comments did you add to DREAM?

None

Fewer than 5

5 to 9

10 to 14

15 or more

Section 3: The DREAM experience

Overall, how enjoyable was it to use DREAM?

Not enjoyable 1 2 3 4 5 6 7 Very enjoyable

What would make DREAM more enjoyable to use?

How likely is it that you will continue to use DREAM?

Not likely 1 2 3 4 5 6 7 Very likely

Would you recommend DREAM to your colleagues? Yes No

Would you recommend DREAM to your students? Yes No

Could you find what you wanted quickly? Yes No

Overall, did you find the resources you were looking for? Yes No

How well did DREAM function on your device(s)?

Not very well 1 2 3 4 5 6 7 Very well

Is there anything else you would like to add?

Acknowledgements

The authors thank the classroom teachers and the independent music teachers who took part in the research, as well as the project leaders, software developers, and designers. This work was supported by a partnership grant from the Social Sciences and Humanities Research Council of Canada (SSHRC), the Canada Foundation for Innovation (CFI), The Royal Conservatory, the Centre for the Study of Learning and Performance at Concordia University, and Queen’s University.

References

Barnum, C. (2011). Usability testing essentials: Ready, set … test! London, UK: Elsevier.

Beckstead, D. (2001). Will technology transform music education? Music Educators Journal, 87, 44–49.

Burnard, P. (2007). Reframing creativity and technology: Promoting pedagogic change in music education. Journal of Music Technology and Education, 1(1), 196–206.

Buxton, B. (2007). Sketching user experiences: Getting the design right and the right design. Amsterdam: Elsevier.

Feldman, S. (2010). RCM: A quantitative investigation of teachers associated with the RCM exam process. Toronto, ON: Susan Feldman & Associates.

Partti, H. (2012). Cosmopolitan musicianship under construction: Digital musicians illuminating emerging values in music education. International Journal of Music Education.

Prensky, M. (2009). H. sapiens digital: From digital immigrants and digital natives to digital wisdom. Innovate 5(3). Retrieved from http://www.innovateonline.info/index.php?view=article&id=705

Rainie, L., & Wellman, B. (2012). Networked: The new social operating system. Cambridge, MA: MIT Press.

Rubin, J., & Chisnell, D. (2008). Handbook of usability testing: How to plan, design, and conduct effective tests (2nd ed.). Indianapolis, IN: Wiley Publishing, Inc.

Upitis, R., Brook, J., & Abrami, P. C. (in press). Independent music teaching in the 21st century: What teachers tell us about pedagogy and the profession. Proceedings of the Research Commission of the International Society of Music Education (ISME) World Conference, Porto Alegre, Brazil, July, 2014.

Waldron, J. (2013). YouTube, fanvids, forums, vlogs and blogs: Informal music learning in a convergent on- and offline music community. International Journal of Music Education, 31(1), 91–105.

Wise, S., Greenwood, J., & Davis, N. (2011). Teachers’ use of digital technology in secondary music education: Illustrations of changing classrooms. British Journal of Music Education, 28(2), 117–134.

Yadrich, D. M., Fitzgerald, S. A., Werkowitch, M., & Smith, C. E. (2012). Creating patient and family education web sites: Assuring accessibility and usability standards. Computers, Informatics, Nursing, 30(1), 46–54.

Copyright information

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.